Obviously the Vision Pro isn’t the first VR/AR/whatever device, but it’s the first one that’s got enough resolution to prevent me from getting pissed off as soon as I stick it to my face.

And while there have already been reams of articles published about other aspects of using the Vision Pro, I haven’t seen anybody yet discuss what it’s like to read a comic book with it. So… here you go.

To set the stage, I’ll have to say that I’ve never really loved reading comics on my laptop or iPad. It’s mostly fine, but it’s not the same as what you’ve got after your Wednesday comics run.

See, comics artists (writers and illustrators both) still do think about how the story is presented in a traditional paper format. And many times that includes how two pages, side-by-side, will tell the story. Sometimes that’s a two-page spread, sometimes it’s just knowing how the reader’s eyes will flow as they’re looking at the individual panels across those two pages.

If you’re reading on a tablet or a laptop, that two-page experience is compromised relative to a ‘real’ comic book. You either read a page at a time (on a vertically oriented iPad for example) in order to see it at a nice size, or else you put up the two pages on the screen at the same time and then they get… small.

Because unless you’ve got a display that’s at least 13 inches wide and a little more than 10 inches tall, those two pages end up smaller than a standard comic. There are a few monster laptops that get close, but I’ll never buy such a beast. And I’m also not going to go sit at my desk with the big monitor to read a comic book – comics are a lying-on-the-couch (or in bed) activity.

Enter Vision Pro. There are no limits to the page size because the display fills your field of view.

So far I’ve only tried out Apple’s ‘Books’ app, and it’s got a few issues, but overall it’s usable. To be clear, this isn’t yet a Vision Pro-specific app – I’m just using the iPad version.

(And for some reason Volume 1 of the Sandman series was only $3.99 so that was a nice quick purchase for purposes of testing, even though I already own several other versions of it.)

To use it, just open the Books app, drag the window to a comfortable size and… boom. It’s a big version of the comic hovering in space in front of you. But the interaction is, at this point, really fiddly. Page turns are apparently supposed to work by touching your thumb to your finger and then dragging, but I often experienced lag, and there were times when the page actually turned back instead of forward. It also kept getting into a mode where it would highlight a full page and then inexplicably give me a prompt to ‘copy’.

I assume these issues will be worked out once there’s a native Books app, not to mention some of the other comics-reading solutions out there that will undoubtedly show up soon. So I’m not going to dwell too much on problems I’m experiencing on day one.

But I will say that visually, it’s really really nice. It’s up to you to choose where you want to place the book in space (i.e. how far away it feels) as well as how large it is. For my first attempts I mostly ended up with something that felt like it was about 2 feet wide, positioned about the same distance in front of me.

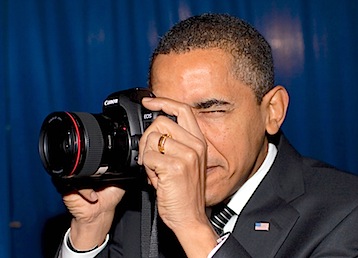

But I was also curious about zooming wayyy in, particularly if the source material supports it. So I did a quick scan of my own to test. And I’ve gotta say, it’s pretty fun to have something like this floating in front of you…

and then walk up and/or zoom until you can see this:

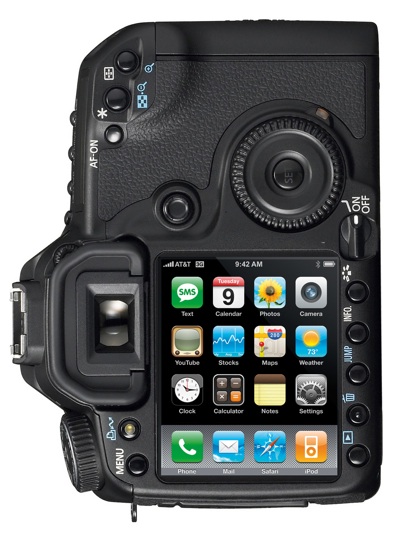

or even this:

(Yeah I know, this isn’t strictly speaking something you need a Vison Pro to do… but it’s a different feeling with grape-sized halftone dots within arm’s reach vs. just zoomed up on a flat display).

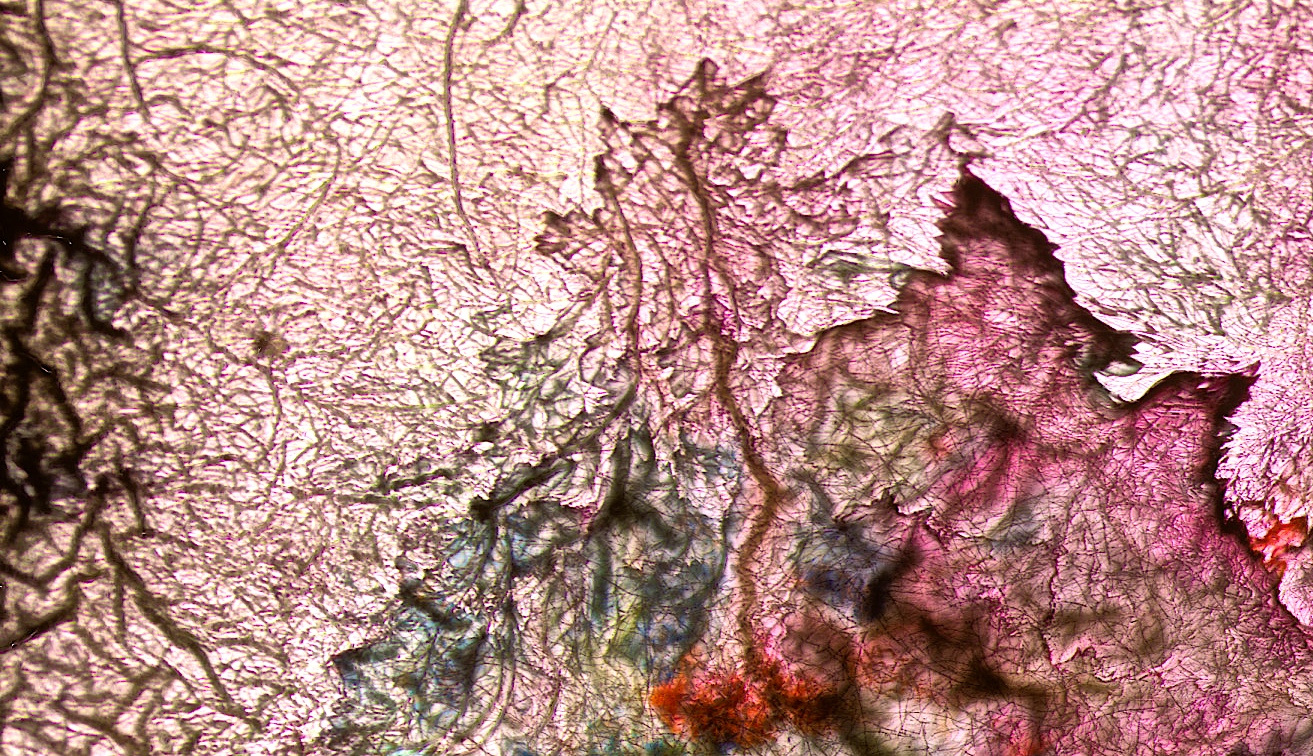

I also wondered about alternate ways of displaying things. Although the Books reader is just a flat, two-dimensional window, Apple does support a viewing mode for panoramic images that makes them very large and curves them around you.

So, just for grins, I went ahead and stitched a few comics pages side-by-side (including a double-page spread) and tossed it up to see how it would feel.

And I actually like this experience a lot. The photo at the top of this post gives you a rough idea of what it’s like, but obviously you need to experience it directly. It’s extremely immersive… like you’re within the book instead of just looking at a page or two. I couldn’t find a way to adjust the size or the amount of curvature in this mode, and the default was probably more than I really wanted.

But it’s pretty neat to just look a little to your left if you want to go back a page or two to remind yourself of something you just read. On the other hand, you could argue that this scenario sort of derails the artist’s intended experience since you can now peripherally see the next two pages before you get to them…

Anyway, I’m sure there will be artists and developers experimenting with how best to view and interact with existing comics using this thing. And beyond that, there will also be new comics that take advantage of this unbounded space in entirely different ways. Individual panels floating in space over massively detailed backgrounds, oddball aspect ratios, etc.

(I vaguely remember some comic (maybe by Mike Allred?) that opened ‘upwards’ vertically (i.e. stapled on the top) to get a really tall page. Hang on… googling… Ahhhh yes. The comic was actually called ‘Vertical’ and written by Steven T. Seagle with art by Allred. Published by Vertigo in 2004. Cool. I’ll have to go read that again with the top of the page towering over me.)

And someone go turn J.H. Williams III loose on this new boundary-less paradigm, because if there’s anybody who wants to break free of the page, it’s him.

Bottom line – I know I’ll always enjoy the tactile pleasure of actual paper in my hand, but I think this new spatial view is going to be a VERY fun alternative. If anybody’s got further questions about the comic-reading experience on this device (or has experience with other VR devices that they can share), please comment below!

ADDENDUM: Check out the comment below from Rotem Cohen, who has created a Vision Pro comic book reader that includes some of the stuff I talked about above. It’s really nicely done!